DocsHound uses visual AI to identify key moments in product or process demonstrations, automatically capture relevant screenshots, and apply contextual highlighting, all without manual editing. It’s a straightforward proposition, but perfecting it has taken several iterations as we’ve incorporated the rapid advances in AI models over the past year.

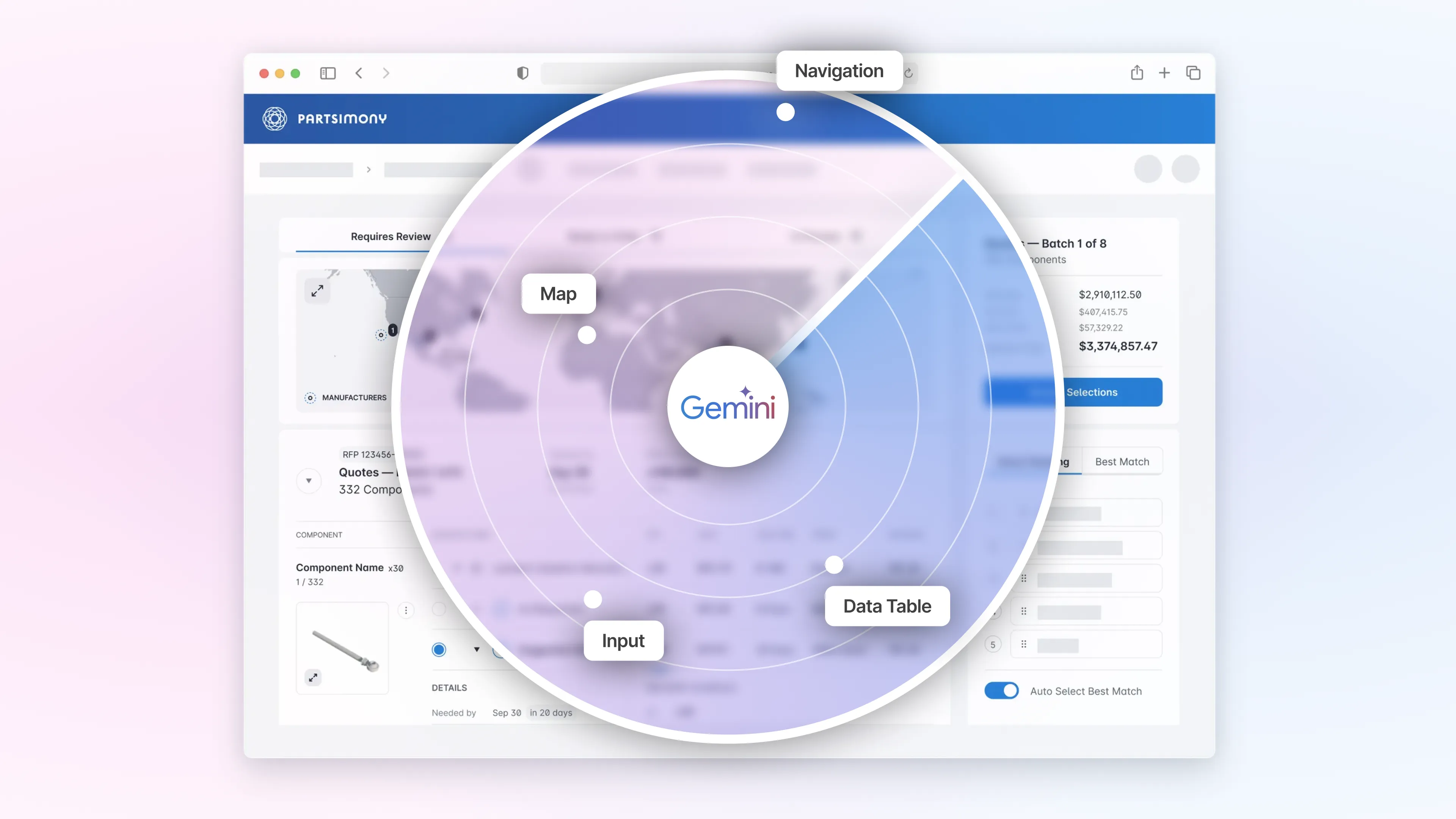

We are excited to report our latest advancements, built on Google Gemini 2.5 Flash. Compared with other models we’ve worked with, Gemini 2.5’s multimodal object detection has raised the bar on DocsHound’s accuracy across the whole pipeline: automatically analyzing screen-share videos to identify key steps in the interface, generating screenshots, identifying relevant UI elements, and feeding that analysis as rich context to the assistive agents that build documentation as our customers demo their applications.

To meet customer needs, however, DocsHound must be both accurate and fast. Fortunately, latency is another area where Gemini is a massive improvement. Gemini Flash and Flash-Lite are extremely fast while delivering very high quality on the vision summarization tasks at the core of DocsHound (e.g., “describe this series of interactions with the user interface in detail”).

When we started building DocsHound a year ago, we used small models such as GPT-3.5 Turbo. It was lightning fast, but its output was full of errors and hallucinations. It demoed extremely well, but when we tried to use it as a day-to-day tool, it became a hindrance: we had to sort out what was accurate and then edit out the errors manually. As development continued, we tested different models and kept refining our prompts, focusing on accuracy and avoiding hallucinations. We now generate very accurate articles, but, predictably, the time to generate them went way up.

Most recently, we have focused specifically on reducing latency. The problem is common to generative AI tools, and the standard design solution for tasks that take more than a few seconds is a user experience in which you see the tool “thinking” and the output building in real time. We followed that pattern with DocsHound as well. But we reached the point where there were no more UX tricks to mask the latency and nothing left in our infrastructure to optimize. The biggest bottleneck became the models themselves.

With hundreds of thousands of documents generated on DocsHound to date, our evaluation sets now let us benchmark the throughput of specific models and compare them on latency, quality, and accuracy. Gemini is showing the strongest results among all our current providers. In our beta testing this month, switching to Gemini cut generation time from minutes to seconds. We’re now testing Gemini extensively across our agents, and if the beta results stay strong, we plan to cut over to production shortly.
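To make “benchmarking across latency and quality” concrete, here is a minimal, hypothetical sketch of how per-model results from an evaluation run might be summarized. The `EvalRecord` fields, the 0-1 quality score, and the model labels are illustrative assumptions, not DocsHound’s actual schema or numbers.

```python
"""Summarize per-model latency and quality from an evaluation run.

Hypothetical sketch: the record fields and model labels are placeholders.
"""
import math
import statistics
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class EvalRecord:
    model: str         # hypothetical model label
    latency_s: float   # wall-clock time to generate one article, in seconds
    quality: float     # hypothetical 0-1 score from a grader or reviewer


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records: list[EvalRecord]) -> dict[str, dict[str, float]]:
    """Group records by model and report median/p95 latency and mean quality."""
    by_model: dict[str, list[EvalRecord]] = defaultdict(list)
    for r in records:
        by_model[r.model].append(r)

    summary: dict[str, dict[str, float]] = {}
    for model, rs in by_model.items():
        latencies = [r.latency_s for r in rs]
        summary[model] = {
            "median_latency_s": statistics.median(latencies),
            "p95_latency_s": percentile(latencies, 95),
            "mean_quality": statistics.fmean(r.quality for r in rs),
        }
    return summary


if __name__ == "__main__":
    # Toy numbers for illustration only; these are not measured results.
    runs = [
        EvalRecord("model-a", 95.0, 0.91),
        EvalRecord("model-a", 120.0, 0.88),
        EvalRecord("model-b", 6.5, 0.90),
        EvalRecord("model-b", 8.0, 0.92),
    ]
    for model, stats in summarize(runs).items():
        print(model, stats)
```

Reporting the median alongside a tail percentile such as p95, rather than only the mean, keeps a few slow generations from hiding behind a good average, which matters when users are waiting on the result.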

On our roadmap, we plan to use Gemini’s multimodal object detection to automatically detect nuanced user interface elements. This is a hard problem that no one has really cracked yet: unlike the footage in typical machine learning training sets for video, a screen recording looks nearly the same from frame to frame. We are already seeing success with it in our current testing.
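Once a detection model returns the locations of UI elements, turning them into screenshot highlights is mostly coordinate bookkeeping. Google’s Gemini documentation describes 2D bounding boxes as `[ymin, xmin, ymax, xmax]` normalized to a 0-1000 scale; the sketch below assumes that convention (worth checking against the current docs) and also assumes the model was prompted to reply with a JSON list of `{"box_2d": [...], "label": "..."}` objects. `PixelBox` and `to_pixel_boxes` are hypothetical names, and this is an illustration, not DocsHound’s implementation.

```python
import json
from dataclasses import dataclass


@dataclass
class PixelBox:
    label: str
    left: int
    top: int
    right: int
    bottom: int


def _scale(value: float, size: int) -> int:
    """Map a 0-1000 normalized coordinate to pixels, clamped to the image."""
    return min(max(round(value * size / 1000), 0), size)


def to_pixel_boxes(response_text: str, width: int, height: int) -> list[PixelBox]:
    """Convert normalized [ymin, xmin, ymax, xmax] boxes into pixel rectangles.

    Assumes (hypothetically) that the model was asked to return JSON such as
    [{"box_2d": [ymin, xmin, ymax, xmax], "label": "Save button"}].
    """
    boxes = []
    for item in json.loads(response_text):
        ymin, xmin, ymax, xmax = item["box_2d"]
        boxes.append(
            PixelBox(
                label=item.get("label", ""),
                left=_scale(xmin, width),
                top=_scale(ymin, height),
                right=_scale(xmax, width),
                bottom=_scale(ymax, height),
            )
        )
    return boxes


if __name__ == "__main__":
    reply = '[{"box_2d": [100, 250, 150, 400], "label": "Save button"}]'
    for box in to_pixel_boxes(reply, width=1920, height=1080):
        print(box)
```

For a 1920x1080 screenshot, the example reply maps to a rectangle from (480, 108) to (768, 162), which a renderer could then outline or dim around. Clamping guards against coordinates that drift slightly outside the 0-1000 range.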