Let me tell you how comically easy it is to build an AI chatbot:

- Take your documentation

- Select all of it

- Put it in ChatGPT

- Write two dashes

- Start asking questions

That is it. That is the entire process.
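For anyone who would rather script the steps above than paste by hand, here is a minimal sketch. The function name `build_prompt` and the sample docs are made up for illustration, and sending the resulting string to a model is left out because that part depends on whichever chat product or API you use.

```python
def build_prompt(docs: list[str], question: str, separator: str = "--") -> str:
    """Join every non-empty doc, add the two-dash separator, then the question."""
    corpus = "\n\n".join(doc.strip() for doc in docs if doc.strip())
    return f"{corpus}\n\n{separator}\n\n{question.strip()}"


# Hypothetical documentation snippets, standing in for "select all of it".
docs = [
    "# Billing\nInvoices are issued monthly.",
    "# Exports\nUse the Export button on the Reports page.",
]

prompt = build_prompt(docs, "How do I export a report?")
print(prompt)
```

The separator only needs to make it obvious where the documentation ends and the question begins.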

Well, you also need an interface, integration into your ecosystem, and other elements to make it truly useful - but the AI component powering it is, at a base level, not all that magical.

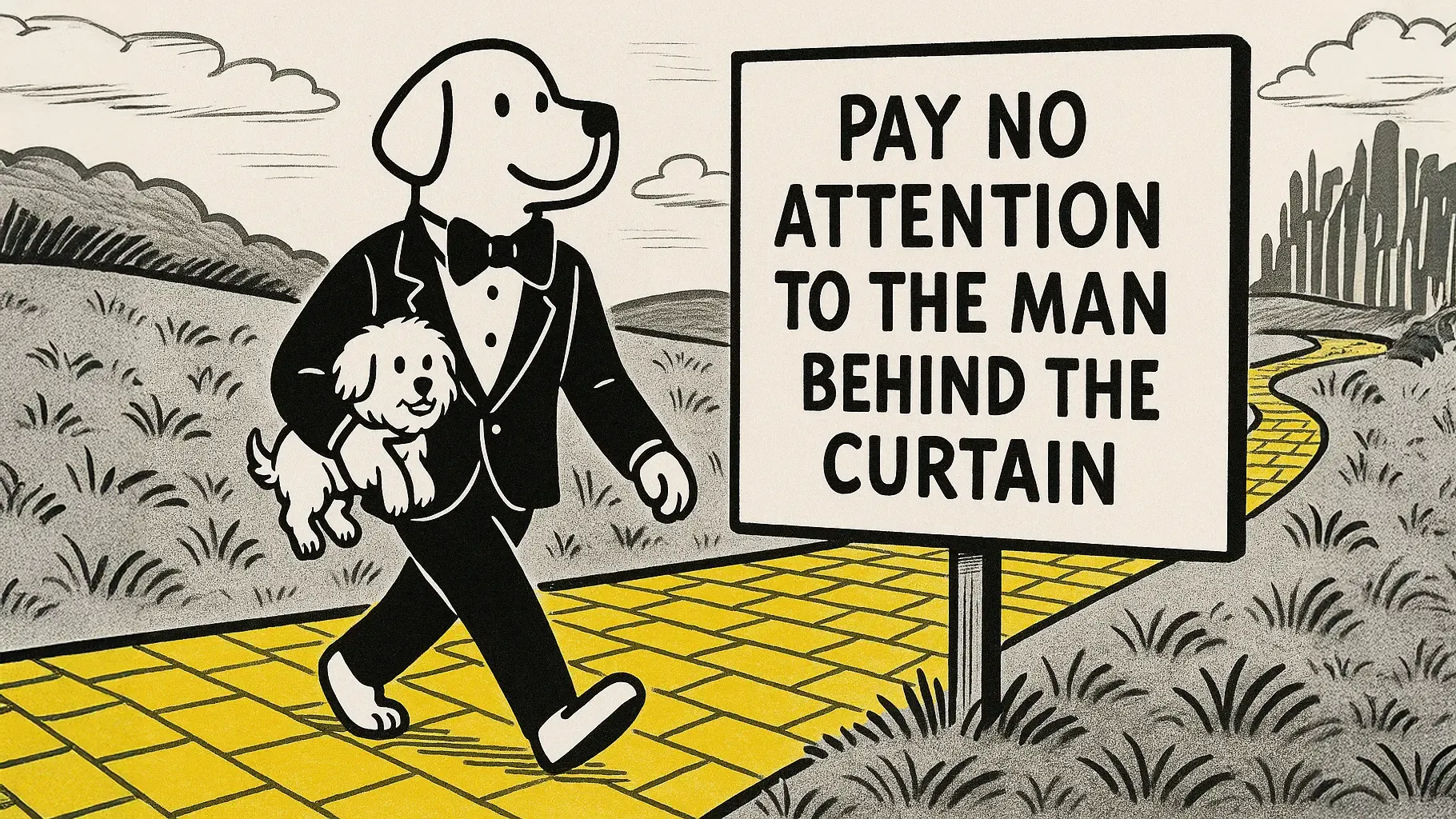

For all the chatter about RAG, vector databases, and neural embeddings, there is an uncomfortable truth:

The extraction and codification of knowledge into machine-queryable formats is no longer the insurmountable technical challenge it once was. Modern frontier models possess remarkable capabilities for information retrieval without complex architectures mediating the process.

Or, in plainspeak: you can literally paste your docs into ChatGPT and get good answers. But do you even have the docs in the first place?

Which gets us to the catches and what is actually hard:

- If your documentation is terrible, your chatbot will be terrible

- You need documentation in the first place

- You need a nice interface integrated into your product and operational workflow

RAG to Riches

RAG does still have legitimate use cases - when your content truly exceeds even modern context windows, when connecting to live data, or when integrating multiple disparate sources. But why are you integrating with multiple disparate data sources in the first place? More data ≠ better if a huge chunk of it is bunk or immaterial to the task at hand.

Connecting your entire Google Drive, Notion workspace, and Slack history is precisely the wrong approach. You may have 10 facts surrounded by 50 statements that say the opposite. Much of it was written by people who have since left the company, and it is full of contradictions and gaps. It may even contain information that you absolutely do not want to be customer-facing.

Nobody has time to clean up notes that were written to address the challenge of the day rather than to power your future AI.

You can have the best vector search in the world, but ultimately all it is doing is grabbing bits of text and putting them in a prompt. If those bits of text do not exist or they say different things, it is not going to work very well.
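To make that concrete, here is a toy sketch of the retrieve-then-prompt step. It deliberately swaps vector search for plain word overlap so it runs without any libraries; the function names and the sample chunks are invented for illustration. The failure mode is the same either way: the retriever surfaces whatever text looks relevant, contradictions included.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(chunks: list[str], question: str, k: int = 2) -> list[str]:
    """Return the k chunks sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:k]


# Hypothetical knowledge base with two statements that contradict each other.
chunks = [
    "Refunds are available within 30 days of purchase.",
    "Refunds are not available after purchase.",
    "The office is closed on public holidays.",
]

question = "Are refunds available after purchase?"
top = retrieve(chunks, question)

# The retrieved bits of text are simply placed in front of the question.
prompt = "\n".join(top) + "\n\n--\n\n" + question
print(prompt)
```

Both refund statements score well and both land in the prompt, so the model is left to guess which one is true. Better search does not fix that; better source content does.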

It is like onboarding a new employee by saying “go check out our Google Drive and Slack history” without allowing them to ask questions, then expecting them to perform perfectly two hours later.

We have not reached artificial general intelligence yet - so why would we expect AI to navigate your information chaos better than an exceptionally bright human could?

There is a decent chance that rather than getting Accenture and 15 analysts to implement your RAG system, you would be better off clicking through 10 parts of your app with DocsHound and creating proper documentation.

How We Got Here

In late 2023, when GPT-4 Turbo was released, we saw a frontier model with a context window capable of handling 128,000 tokens - enough for hundreds of pages of documentation. This changed everything.

At DocsHound, we were building our first iteration when this happened. We had been working with RAG systems because they were necessary with smaller context windows. RAG required more overwrought documentation than a human would need.

No matter how much easier DocsHound made it to create documentation, it still required excessive effort.

It felt like creating documentation for a search engine - because essentially, we were.

When we could suddenly feed entire documentation sets directly into the model, the results were surprisingly good but very expensive at that time.

Today, the frontier models have become increasingly efficient without losing any noticeable smarts.

Costs have only trended downward, and a full-context approach is now economically viable.

Today’s models can handle enormous amounts of context. GPT-4.1 processes up to 1 million tokens, Claude 3.7 Sonnet manages 200,000 tokens, and Google’s Gemini 1.5 Pro-002 accepts up to 2 million tokens. This means a model can hold your entire product documentation, support articles, and tutorials in context all at once.
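A rough way to check whether your own docs fit is sketched below, using the window sizes quoted above. The 4-characters-per-token ratio is only a common rule of thumb for English text, not an exact tokenizer, and the `reserve_tokens` budget for the question and answer is an assumed figure. The function names are illustrative.

```python
# Context window sizes as quoted in this article, in tokens.
CONTEXT_WINDOWS = {
    "GPT-4.1": 1_000_000,
    "Claude 3.7 Sonnet": 200_000,
    "Gemini 1.5 Pro-002": 2_000_000,
}

CHARS_PER_TOKEN = 4  # assumption: rough heuristic for English prose


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def models_that_fit(text: str, reserve_tokens: int = 4_000) -> list[str]:
    """List models whose window holds the text plus room for question and answer."""
    needed = estimate_tokens(text) + reserve_tokens
    return [name for name, limit in CONTEXT_WINDOWS.items() if needed <= limit]


# Stand-in for a 1.2 million character documentation export.
docs = "x" * 1_200_000

print(estimate_tokens(docs))
print(models_that_fit(docs))
```

For a real decision, count tokens with the tokenizer of the model you plan to use, since the ratio varies by language and by how much code or markup the docs contain.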

This is why DocsHound charges close to nothing on a per-response basis. While some companies will charge a dollar per successful response, and get to decide themselves whether the response was successful, we take a different approach.

Why DocsHound Then?

DocsHound has a great chatbot, and so do others. In fact, I could say it is the best, and it would not matter: there are plenty of other solutions with a compelling interface and an in-context approach.

What makes us different is that we actually make it easier to create the content in the first place.

Our Chrome extension lets you generate documentation by simply using your product while our AI watches and learns. Every explanation you give, every workflow you demonstrate becomes part of a connected knowledge system that actually makes sense. We process all your screenshots through vision language models, making every visual element queryable alongside text.

We do not believe people want to learn to be prompt engineers, which is why the prompt engineering is built into DocsHound. We have fine-tuned our instructions to ensure your chatbot gives concise, accurate answers instead of verbose, essay-like responses.

The same is true of the AI-generated docs themselves.

In fact, we do not believe that customization is even necessary.

For all the marketing gurus crafting ‘on-brand support experiences’ – the rest of us living on planet Earth just want a straight answer when something breaks. Nobody thinks about your brand tone while trying to “do X”. They want the solution, not your company’s philosophical manifesto on customer interaction.

As we demonstrated with our IRS experiment, this approach works even with massive amounts of complex information. We put in over 50 IRS publications - essentially the entire tax code - and the system handled it beautifully. Is your product or process documentation really more complicated than the entire United States tax code? Probably not.

You could learn to do all this yourself, of course. But you probably have a business to run.

Try It Yourself

Today, I can get a support ticket through our bot, pop open our extension, click 5 times while narrating, and share the link with the customer. In doing so, I have created a knowledge asset that will extend into the future. In minutes.

If you want to see this in action, click through your workflow in DocsHound, then try asking our chat a question.

Information retrieval is dead simple with AI. The extraction and structuring of that information is the real challenge. That is what DocsHound solves.